In the rapidly evolving landscape of artificial intelligence, diffusion models have emerged as one of the most promising and effective approaches to generative modeling. These models are reshaping how AI systems generate images, text, and other complex data types, offering new possibilities for creativity, simulation, and problem-solving.

This article explores the fundamentals of diffusion models, their applications, and how they differ from traditional generative models like GANs (Generative Adversarial Networks). It also delves into the impact of diffusion models on various industries and their potential to drive future innovations.

What are Diffusion Models?

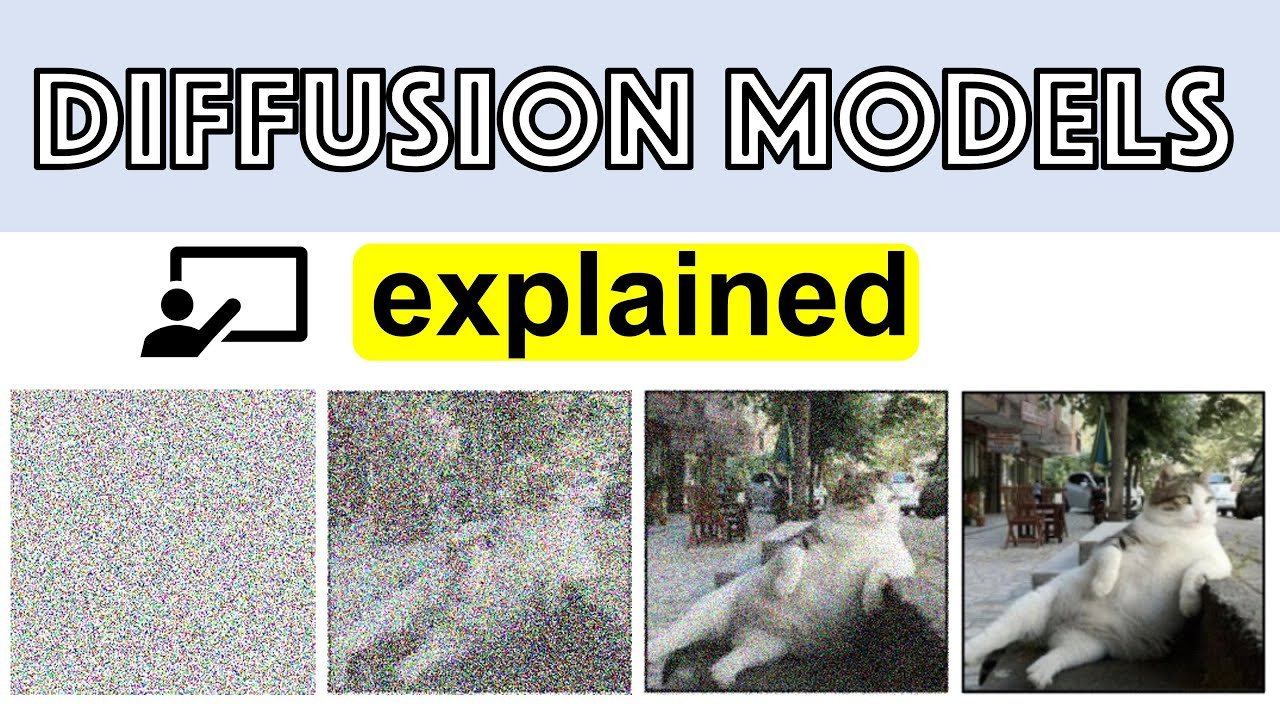

Diffusion models are a class of generative models that produce data by gradually transforming a simple distribution, such as Gaussian noise, into a complex data distribution. Generation proceeds as a series of small, iterative steps, each removing a little noise, until a desired output such as an image or a piece of text emerges.

The idea behind diffusion models is inspired by physical processes where particles diffuse or spread out over time, such as the diffusion of ink in water. In the context of machine learning, diffusion models reverse this process, starting with noise and systematically refining it until it resembles the target data distribution.

How Do Diffusion Models Work?

At the core of diffusion models are two complementary processes: forward diffusion and reverse diffusion.

Forward Diffusion:

- In the forward diffusion process, an input data point (e.g., an image) is gradually corrupted by adding a small amount of noise, typically Gaussian, at each step. The result is a series of increasingly noisy versions of the original data, eventually turning it into pure noise.

- This process is usually designed so that each step of adding noise is small and incremental, allowing the model to learn how the data distribution evolves as it becomes more corrupted.
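The forward process described above can be sketched in a few lines. This is a minimal illustration, not a reference implementation: the linear noise schedule, its endpoint values, and the function names (`linear_beta_schedule`, `cumulative_alphas`, `forward_diffuse`) are assumptions chosen for the example, and the "image" is just a short list of numbers. It relies on a well-known property of Gaussian noise: many small noising steps can be collapsed into a single jump to any step `t`.

```python
import math
import random

def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances, rising linearly (assumed schedule for this sketch)."""
    if num_steps == 1:
        return [beta_start]
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]

def cumulative_alphas(betas):
    """alpha_bar[t] = product of (1 - beta) over steps 0..t, the fraction of signal left."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars

def forward_diffuse(x0, t, alpha_bars, rng):
    """Jump straight from clean data x0 to its noisy version at step t."""
    signal_scale = math.sqrt(alpha_bars[t])
    noise_scale = math.sqrt(1.0 - alpha_bars[t])
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [signal_scale * x + noise_scale * e for x, e in zip(x0, noise)]
    return xt, noise

betas = linear_beta_schedule(1000)
alpha_bars = cumulative_alphas(betas)
rng = random.Random(0)
x0 = [1.0, -1.0, 0.5, 0.0]                                # stand-in for image pixels
x_early, _ = forward_diffuse(x0, 10, alpha_bars, rng)     # still close to x0
x_late, _ = forward_diffuse(x0, 999, alpha_bars, rng)     # almost pure noise
```

Early steps keep most of the signal, while by the last step `alpha_bars[-1]` is close to zero, so almost nothing of the original data point remains.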

Reverse Diffusion:

- The reverse diffusion process is where the actual generative task occurs. Starting with pure noise, the model iteratively removes noise at each step. The forward process itself is fixed rather than learned; what the model learns is how to undo its corruption one step at a time. This gradually refines the noisy input into a coherent and meaningful output that resembles the target data distribution.

- The reverse diffusion process is governed by a deep neural network, commonly a U-Net or a transformer, which is often trained to predict the noise present at each step so that it can be removed to produce high-quality samples.
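To make the reverse process concrete, here is a hedged sketch of a DDPM-style sampling loop on a single number. The trained network is replaced by `toy_noise_predictor`, a hypothetical stand-in that is exact only because the toy "dataset" consists of one value (`DATA_VALUE`); in a real system this would be a learned model. The schedule values and function names are likewise assumptions for illustration.

```python
import math
import random

DATA_VALUE = 2.0  # toy dataset: every clean sample equals this value (assumption)

def make_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Return (betas, alpha_bars) for a linear noise schedule."""
    betas, alpha_bars, running = [], [], 1.0
    for i in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * i / max(num_steps - 1, 1)
        running *= 1.0 - beta
        betas.append(beta)
        alpha_bars.append(running)
    return betas, alpha_bars

def toy_noise_predictor(xt, t, alpha_bars):
    """Stand-in for the trained network: the exact noise for a one-point dataset."""
    return (xt - math.sqrt(alpha_bars[t]) * DATA_VALUE) / math.sqrt(1.0 - alpha_bars[t])

def reverse_diffuse(num_steps, predictor, rng):
    """Start from pure noise and remove a little noise at every step."""
    betas, alpha_bars = make_schedule(num_steps)
    x = rng.gauss(0.0, 1.0)
    for t in reversed(range(num_steps)):
        eps_hat = predictor(x, t, alpha_bars)
        # Mean of the denoised value: subtract the predicted noise, then rescale.
        mean = (x - betas[t] / math.sqrt(1.0 - alpha_bars[t]) * eps_hat) / math.sqrt(1.0 - betas[t])
        # Fresh noise is injected at every step except the last one.
        fresh_noise = rng.gauss(0.0, 1.0) if t > 0 else 0.0
        x = mean + math.sqrt(betas[t]) * fresh_noise
    return x

sample = reverse_diffuse(200, toy_noise_predictor, random.Random(0))
print(sample)
```

Because the stand-in predictor is exact for this one-point dataset, the loop should land very close to `DATA_VALUE`. With a learned network and real data, the same loop would instead produce varied samples from the data distribution.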

Key Advantages of Diffusion Models

Diffusion models have several advantages over traditional generative models, such as GANs and VAEs (Variational Autoencoders), which contribute to their growing popularity:

High-Quality Outputs:

- Diffusion models have demonstrated the ability to generate high-quality outputs, often surpassing the quality of samples produced by GANs. This is particularly evident in image generation tasks, where the best diffusion models produce results that can be difficult to distinguish from real images.

Stability and Training Efficiency:

- One of the significant challenges with GANs is the instability of the training process, which can lead to issues like mode collapse. Diffusion models, on the other hand, are generally more stable during training, leading to more consistent results across different tasks.

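- Training also avoids the adversarial game between a generator and a discriminator. The network is instead fit with a simple regression-style objective, typically predicting the noise that was added at a randomly chosen step, which reduces the risk of unstable behavior during training.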
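The stability point is easier to see with the training objective written out. The sketch below is deliberately tiny and rests on several assumptions: a single fixed noise level (`ALPHA_BAR`) instead of randomly sampled steps, a one-value toy dataset, and a two-parameter linear "network" `w * x_t + b` in place of a deep model. What it does share with real diffusion training is the shape of the loss: a plain mean squared error between the predicted noise and the noise that was actually added.

```python
import math
import random

ALPHA_BAR = 0.5    # assumed signal fraction at the single noise level used here
DATA_VALUE = 2.0   # toy dataset: every clean sample equals this value

def noisy_pair(rng):
    """Return (x_t, eps): a noised sample and the noise that produced it."""
    eps = rng.gauss(0.0, 1.0)
    xt = math.sqrt(ALPHA_BAR) * DATA_VALUE + math.sqrt(1.0 - ALPHA_BAR) * eps
    return xt, eps

def mse(w, b, pairs):
    """Mean squared error between predicted noise (w * x_t + b) and true noise."""
    return sum((w * xt + b - eps) ** 2 for xt, eps in pairs) / len(pairs)

def train(steps=2000, batch_size=32, lr=0.05, seed=0):
    """Plain mini-batch gradient descent on the noise-prediction loss."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    for _ in range(steps):
        batch = [noisy_pair(rng) for _ in range(batch_size)]
        # Gradients of the MSE loss with respect to w and b.
        grad_w = sum(2 * (w * xt + b - eps) * xt for xt, eps in batch) / batch_size
        grad_b = sum(2 * (w * xt + b - eps) for xt, eps in batch) / batch_size
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

eval_rng = random.Random(123)
eval_pairs = [noisy_pair(eval_rng) for _ in range(1000)]
initial_loss = mse(0.0, 0.0, eval_pairs)
w, b = train()
final_loss = mse(w, b, eval_pairs)
print(initial_loss, final_loss)
```

There is no second network to balance against here, only one loss going down, which is the intuition behind the claim that diffusion training tends to be better behaved than GAN training.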

Diversity of Generated Samples:

- Diffusion models are less prone to mode collapse, a problem where generative models produce a limited variety of outputs despite being trained on diverse data. This means diffusion models can generate a wider range of samples, capturing the full diversity of the underlying data distribution.

Flexibility Across Domains:

- While diffusion models have been most notably successful in image generation, they are not limited to a single domain. These models can be adapted to generate other types of data, including text, audio, and even 3D structures, making them versatile tools in the field of AI.

Applications of Diffusion Models

The versatility and effectiveness of diffusion models have led to their adoption in various applications across different industries:

Image Generation and Editing:

- Diffusion models are increasingly used in creative fields for generating realistic images, artwork, and even photo editing. For example, they can be employed to create photorealistic images from text descriptions, opening up new possibilities in digital art and content creation.

- These models can also be used for image inpainting, where missing parts of an image are filled in realistically, or for style transfer, where the style of one image is applied to another.

Text Generation and Language Modeling:

- In natural language processing (NLP), diffusion models are being explored for tasks such as text generation, machine translation, and summarization. Their ability to model complex data distributions allows them to produce coherent and contextually appropriate text.

- These models can also be used for conditional text generation, where the model generates text based on specific prompts or conditions, making them useful for applications like chatbots and content generation.

Audio and Music Synthesis:

- Diffusion models are making strides in the field of audio synthesis, where they can generate high-quality sounds and music. For instance, they can be used to create realistic soundscapes, generate music in specific genres, or even design new sound effects for media production.

- The iterative nature of diffusion models allows for fine control over the synthesis process, leading to more nuanced and expressive audio outputs.

Scientific Simulations:

- In scientific research, diffusion models are being applied to simulate complex systems, such as molecular dynamics or climate models. Their ability to generate diverse and accurate simulations makes them valuable tools for researchers seeking to explore different scenarios or predict outcomes based on incomplete data.

- These models can also be used in materials science for tasks like predicting the properties of new materials or simulating chemical reactions.

Healthcare and Medicine:

- In the healthcare sector, diffusion models have potential applications in medical imaging, where they can generate high-resolution images from low-quality scans, assist in image reconstruction, or even generate synthetic data for training AI models without compromising patient privacy.

- These models can also be used to simulate biological processes or predict the outcomes of medical treatments, aiding in the development of personalized medicine.

Diffusion Models vs. GANs: A Comparison

While diffusion models and GANs are both popular tools for generative tasks, they differ in several key aspects:

Training Stability:

- GANs are known for their training instability, often requiring careful tuning and monitoring to avoid issues like mode collapse. Diffusion models, by contrast, tend to be more stable during training, because they optimize a single denoising objective rather than balancing two competing networks.

Sample Quality:

- While GANs have been the go-to model for generating high-quality images, diffusion models have started to outperform GANs in many cases, producing samples with fewer artifacts and finer details.

Flexibility:

- GANs are typically designed for specific tasks, such as image generation, and often require significant modifications to adapt to other domains. Diffusion models, on the other hand, tend to be more flexible: the same noising and denoising recipe can be applied to a wider range of data types and tasks, usually with changes to the network and data representation rather than to the overall method.

Mode Coverage:

- Diffusion models are better at capturing the full diversity of the data distribution, avoiding the mode collapse issue that can plague GANs. This means diffusion models can generate a wider variety of outputs, making them more versatile for applications that require diverse samples.

Challenges and Future Directions

Despite their many advantages, diffusion models also face challenges that researchers and developers are actively working to address:

Computational Complexity:

- One of the main drawbacks of diffusion models is their computational cost at generation time. Each sample requires many sequential passes through the network, sometimes hundreds or even a thousand, which can be time-consuming and resource-intensive compared with the single forward pass a GAN needs.

- Ongoing research is focused on developing more efficient algorithms and architectures that can reduce the number of steps required or speed up the reverse diffusion process.
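One family of approaches to reducing the step count keeps the trained model and simply visits fewer steps at generation time. The sketch below illustrates a DDIM-style deterministic update that walks through 20 evenly spaced steps of a 1000-step schedule. As in any toy example, the details are assumptions: the schedule values, the function names, and especially `toy_noise_predictor`, a hypothetical stand-in for the trained network that is exact only because the toy dataset holds a single value. It also assumes the number of sampling steps does not exceed the number of training steps.

```python
import math
import random

DATA_VALUE = 2.0  # toy dataset: every clean sample equals this value (assumption)

def make_alpha_bars(num_steps, beta_start=1e-4, beta_end=0.02):
    """Cumulative signal fraction for a linear noise schedule."""
    alpha_bars, running = [], 1.0
    for i in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * i / max(num_steps - 1, 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars

def toy_noise_predictor(xt, alpha_bar):
    """Stand-in for the trained network: the exact noise for a one-point dataset."""
    return (xt - math.sqrt(alpha_bar) * DATA_VALUE) / math.sqrt(1.0 - alpha_bar)

def sample_with_fewer_steps(num_train_steps, num_sample_steps, rng):
    """Deterministic sampling that visits only a strided subset of the steps."""
    alpha_bars = make_alpha_bars(num_train_steps)
    stride = num_train_steps // num_sample_steps
    timesteps = list(range(num_train_steps - 1, -1, -stride))[:num_sample_steps]
    x = rng.gauss(0.0, 1.0)
    network_calls = 0
    for i, t in enumerate(timesteps):
        eps_hat = toy_noise_predictor(x, alpha_bars[t])
        network_calls += 1
        # Estimate the clean sample implied by the current noisy value.
        x0_hat = (x - math.sqrt(1.0 - alpha_bars[t]) * eps_hat) / math.sqrt(alpha_bars[t])
        # Re-noise that estimate to the next (less noisy) step in the subset.
        is_last = i == len(timesteps) - 1
        alpha_bar_prev = 1.0 if is_last else alpha_bars[timesteps[i + 1]]
        x = math.sqrt(alpha_bar_prev) * x0_hat + math.sqrt(1.0 - alpha_bar_prev) * eps_hat
    return x, network_calls

sample, calls = sample_with_fewer_steps(1000, 20, random.Random(0))
print(sample, calls)
```

Here the network is called 20 times instead of 1000. With a real, imperfect network, fewer steps usually trade some sample quality for speed, which is why the choice of step count remains a tuning decision.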

Scalability:

- While diffusion models can scale to handle large datasets, they may require significant computational resources to do so effectively. This can limit their accessibility to smaller organizations or individual researchers who lack access to high-performance computing infrastructure.

Interpretability:

- As with many deep learning models, diffusion models can be challenging to interpret. Understanding how these models make decisions and generate outputs is an area of active research, with the goal of making them more transparent and explainable.

Conclusion

Diffusion models represent a significant advancement in the field of generative AI, offering high-quality, stable, and versatile solutions for a wide range of applications. From image and text generation to scientific simulations and audio synthesis, these models are pushing the boundaries of what’s possible with AI.

As research continues to address the challenges of computational complexity and scalability, diffusion models are poised to become even more powerful and accessible, driving innovation across multiple industries. Whether you’re a researcher, developer, or content creator, diffusion models offer exciting new possibilities for harnessing the power of AI to generate complex and creative outputs.